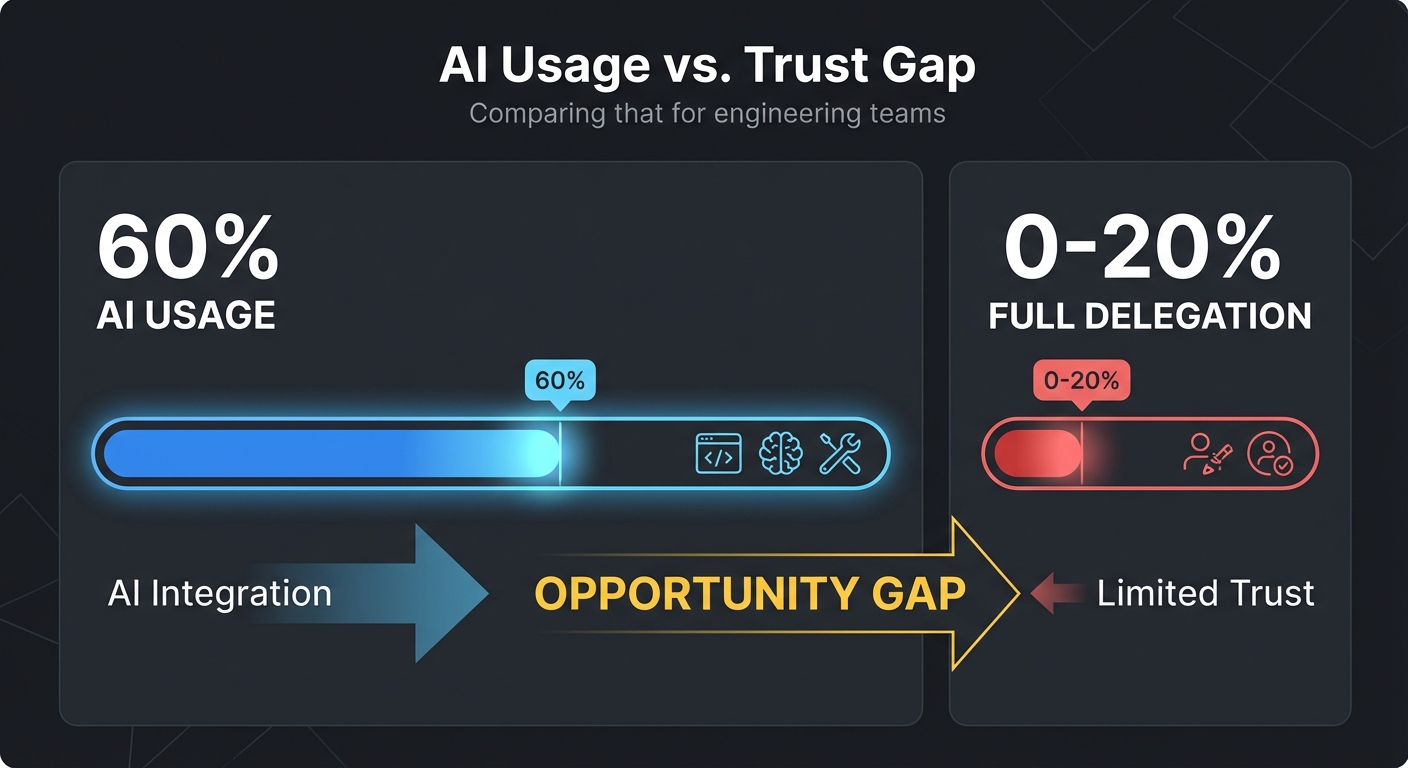

TL;DR: Andrej Karpathy just coined "agentic engineering" as the successor to vibe coding. Anthropic's 2026 report shows engineers use AI in 60% of their work but only fully delegate 0-20%. That gap is where engineering management becomes critical. Here's a practical framework for leading your team through the transition.

The future of engineering management: orchestrating AI agents, not writing code.

Your engineers are already using AI for 60% of their work.

That's not a prediction. That's the finding from Anthropic's 2026 Agentic Coding Trends Report, published this week. And here's the number that should keep every engineering manager up at night: those same engineers report fully delegating only 0-20% of their tasks to AI.

Think about that gap. Your team uses AI constantly but trusts it with almost nothing.

Meanwhile, Andrej Karpathy — the same person who coined "vibe coding" exactly one year ago — just named its successor: agentic engineering. In his words: "The new default is that you are not writing the code directly 99% of the time. You are orchestrating agents who do and acting as oversight."

Orchestrating. Acting as oversight. Sound familiar?

That's literally what engineering managers do every day. And that's why this shift makes your role more valuable, not less — if you know how to lead through it.


The Usage-Trust Gap Is Your Biggest Opportunity

Let's unpack that 60% usage / 0-20% delegation number, because it reveals something important about where your team actually is.

What your engineers are doing with AI right now:

- Code completion and autocomplete (the baseline everyone's adopted)
- Debugging assistance and error interpretation
- Writing tests and documentation
- Boilerplate generation

What they're NOT doing:

- Delegating full feature implementation to AI agents
- Running multi-agent workflows across parallel context windows
- Letting AI make architectural decisions
- Trusting AI-generated code without line-by-line review

The usage-trust gap: your team uses AI constantly but delegates almost nothing.

The gap between "using AI as a fancy autocomplete" and "orchestrating AI agents to build features autonomously" is enormous. And closing that gap isn't a technical challenge — it's a management challenge.

Your engineers aren't under-utilizing AI because they don't know how. They're under-utilizing it because nobody has created the trust framework, quality gates, and team norms that make delegation safe.

That's your job.

Why Engineering Managers Become More Valuable

Here's the counterintuitive truth: in a world where AI writes most of the code, the people who manage how code gets written become more important than ever.

You Already Have the Core Skill

Agentic engineering is fundamentally about orchestration — breaking complex problems into delegatable tasks, maintaining quality standards across multiple contributors, and making judgment calls about what needs human attention vs. what can be automated.

You've been doing this with human engineers for years. The agent layer just adds new instruments to your orchestra.

The New Skills Gap Is a Management Problem

Karpathy explicitly states that agentic engineering "is something folks can learn and improve at." The skills it demands — prompt engineering, context management, task decomposition, output validation — are learnable.

But someone needs to teach them. Someone needs to set the standards. Someone needs to decide which engineers pilot the advanced workflows and which tasks get delegated first.

That someone is you.

Quality at Scale Requires Oversight Architecture

Anthropic's report documents that AI agents can now complete 20 autonomous actions before requiring human input, double what was possible six months ago. That's impressive, but it also means an unsupervised mistake can now compound across twice as many actions before a human sees it.

Engineering managers are uniquely positioned to design the oversight architecture: when does an AI contribution need human review? What are the quality gates? How do you maintain code quality standards when half the commits are AI-generated?

These aren't questions individual contributors should be answering alone.

The Three Skills Every Engineering Manager Needs Now

1. Prompt Engineering Leadership

You don't need to be the best prompt engineer on your team. But you need to understand it well enough to set standards and recognize when your team is doing it poorly.

What this looks like in practice:

- Establish team prompt standards. Just like you have coding conventions, you need prompting conventions. How should engineers describe architectural context to AI? What level of detail produces the best results? Create templates for common workflows.

- From code review to prompt review. When an engineer's AI-generated PR has issues, the root cause is often a poor prompt, not a poor model. Train yourself to trace quality problems back to their prompting source.

- Create a shared prompt library. Your senior engineers will develop effective prompts for common tasks. Capture those. Share them. Iterate on them as a team. This is institutional knowledge that compounds.
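To make the idea of a prompting convention concrete, here is a minimal sketch of what one shared template could look like. The field names (`architecture`, `task`, `constraints`, `acceptance`) and the example values are assumptions for illustration, not a standard from any report or tool.

```python
from string import Template

# Hypothetical team template; the field names are illustrative assumptions.
FEATURE_PROMPT = Template(
    "Context: $architecture\n"
    "Task: $task\n"
    "Constraints: $constraints\n"
    "Done when: $acceptance"
)


def build_prompt(**fields: str) -> str:
    """Fill the shared template; raises KeyError if a field is missing."""
    return FEATURE_PROMPT.substitute(**fields)


# Made-up example values, for illustration only.
prompt = build_prompt(
    architecture="Django monolith, Postgres, Celery workers",
    task="Add rate limiting to the public search endpoint",
    constraints="No new dependencies; keep p95 latency unchanged",
    acceptance="Unit tests cover the limit and the reset window",
)
print(prompt)
```

Failing loudly on a missing field is the point: an engineer can't skip the architectural context or the acceptance criteria without noticing.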

Quick win: In your next team meeting, ask each engineer to share their most effective AI prompt. You'll immediately see who's sophisticated and who's still at the "write me a function" stage.

2. Agent Oversight and Quality Control

The Anthropic report documents organizations running specialized agents in parallel across separate context windows. Multi-agent coordination is becoming standard. Your job is to make sure it doesn't become chaos.

What this looks like in practice:

- Define delegation boundaries. Not everything should be delegated to AI. Critical security code, complex architectural decisions, and novel problem-solving still need human brains. Create clear guidelines for your team about what's AI-appropriate.

- Build AI-aware CI/CD pipelines. Your deployment pipeline should have extra validation steps for AI-generated code. Static analysis, expanded test coverage requirements, maybe even AI-assisted code review of AI-generated code. Layer the safety nets.

- Establish human checkpoints. For multi-agent workflows, define the moments where a human must review before proceeding. Think of it like approval gates in a release process — but for AI work sessions.
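Delegation boundaries and human checkpoints are easier to enforce once they are written down as explicit rules. The sketch below is one possible shape, not a prescribed implementation: the `HUMAN_ONLY` tags, the `Task` type, and the review budget of 20 actions are all assumptions a team would replace with its own.

```python
from dataclasses import dataclass

# Hypothetical tags for work a team might keep human-owned.
HUMAN_ONLY = {"security", "architecture", "novel"}
# Assumed budget: pause for review after this many autonomous agent actions.
MAX_ACTIONS_BEFORE_REVIEW = 20


@dataclass
class Task:
    title: str
    tags: set[str]


def can_delegate(task: Task) -> bool:
    """A task is AI-appropriate only if it carries no human-only tag."""
    return not (task.tags & HUMAN_ONLY)


def needs_checkpoint(actions_since_review: int) -> bool:
    """Require a human review once the agent has used up its action budget."""
    return actions_since_review >= MAX_ACTIONS_BEFORE_REVIEW
```

For example, `can_delegate(Task("Rotate signing keys", {"security"}))` returns `False`, while a task tagged only `{"boilerplate"}` passes.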

Quick win: Audit your team's last 20 PRs. How many included AI-generated code? What was the defect rate compared to human-written code? You probably don't know these numbers. Start tracking them.
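If you record two facts per PR (who mostly wrote it, and whether it later caused a defect), the comparison is a few lines of code. This is a rough sketch under that assumption; the `origin` and `caused_defect` fields are hypothetical labels you would have to collect yourself, and the sample data is made up.

```python
from collections import Counter


def defect_rates(prs: list[dict]) -> dict[str, float]:
    """Return the share of PRs per origin that later caused a defect."""
    totals, defects = Counter(), Counter()
    for pr in prs:
        totals[pr["origin"]] += 1
        defects[pr["origin"]] += int(pr["caused_defect"])
    return {origin: defects[origin] / totals[origin] for origin in totals}


# Made-up sample data, for illustration only.
sample = [
    {"origin": "ai", "caused_defect": True},
    {"origin": "ai", "caused_defect": False},
    {"origin": "human", "caused_defect": False},
    {"origin": "human", "caused_defect": False},
]
rates = defect_rates(sample)
```

With only 20 PRs the rates will be noisy, so treat the first numbers as a baseline to track over time rather than a verdict.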

3. Workforce Orchestration

This is the hardest skill because it's the most human. Your team is going through a fundamental change in how they work, and they have feelings about it.

What this looks like in practice:

- Manage the adoption curve. Some engineers will embrace agentic workflows immediately. Others will resist. Both reactions are valid. Your job is to create space for experimentation while maintaining delivery expectations.

- Rethink performance metrics. An engineer who uses AI effectively to ship 3x more features isn't necessarily a better engineer than one who writes elegant code by hand. But they might be more valuable to the business. You need to figure out what "good" looks like in this new world.

- Watch for psychological impacts. Engineers who spent years mastering their craft may feel threatened when an AI agent produces similar code in minutes. Senior engineers might resist delegation because their identity is tied to being "the person who writes the code." These are real management challenges that require empathy and skill.

- Create growth paths that include AI. "Learn to orchestrate AI agents effectively" should be a legitimate growth objective in your team's development plans. Engineers who master this early will be disproportionately valuable.

Quick win: In your next 1:1 round, ask each engineer: "What percentage of your coding work do you currently delegate to AI? What would you need to double that?" The answers will tell you everything about where your team stands.

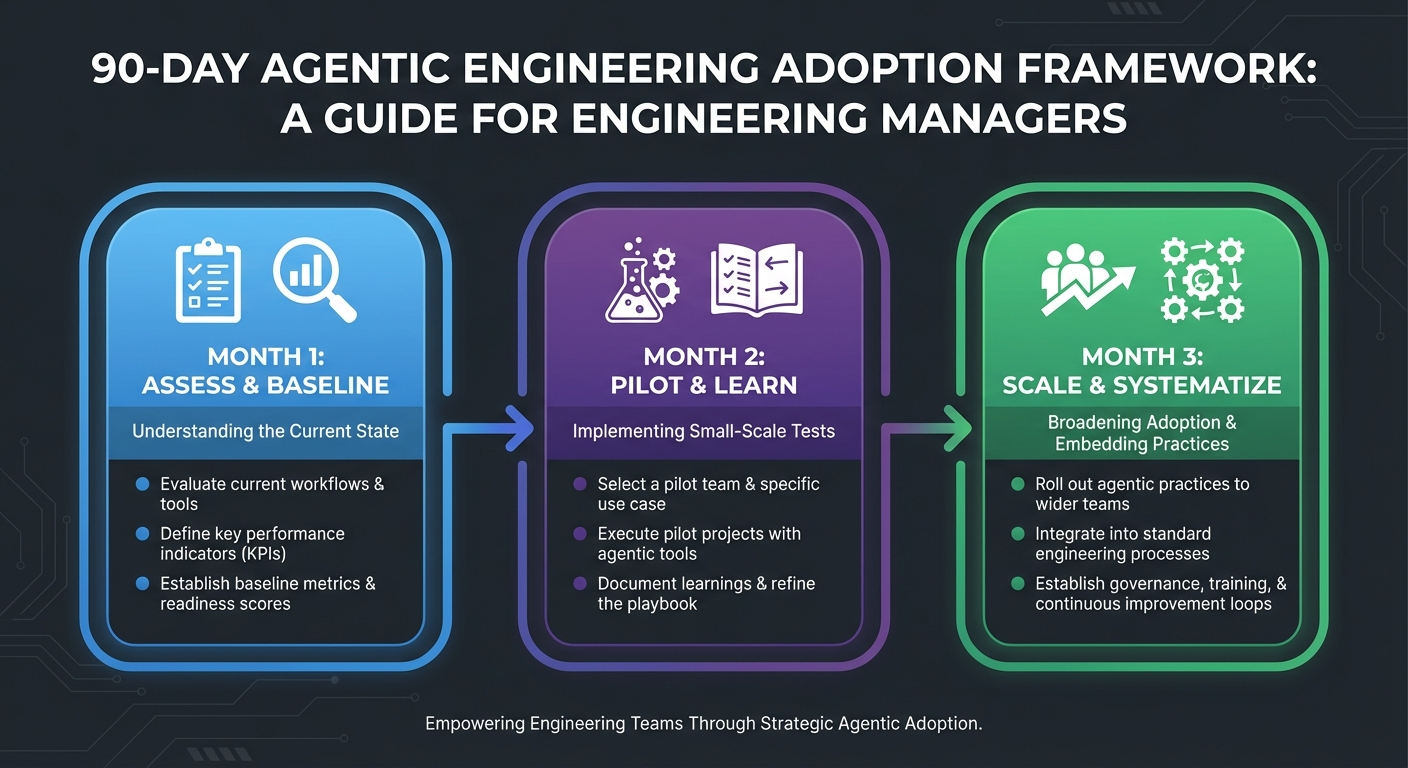

A Practical 90-Day Framework

A practical 90-day framework for rolling out agentic engineering on your team.

Month 1: Assess and Baseline

Week 1-2: Audit current state

- Survey your team's AI usage (tools, frequency, what they delegate)
- Identify your AI champions (engineers already pushing boundaries)
- Map the trust gaps (what are engineers unwilling to delegate, and why?)

Week 3-4: Set baselines

- Track AI vs. human contributions in your codebase
- Measure current cycle time, defect rate, and deployment frequency
- Document your team's existing AI workflows and tools
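The baseline numbers don't need a dashboard to get started. As a minimal sketch, assuming you can export PR open/merge timestamps and a list of deploy dates from your own tooling, cycle time and deployment frequency could be computed like this (the function names and sample timestamps are made up for illustration):

```python
from datetime import datetime
from statistics import median


def median_cycle_time_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours from PR opened to PR merged."""
    return median(
        (merged - opened).total_seconds() / 3600 for opened, merged in prs
    )


def deploys_per_week(deploy_dates: list[datetime], weeks: int) -> float:
    """Average number of deployments per week over the measured window."""
    return len(deploy_dates) / weeks


# Made-up (opened, merged) timestamps, for illustration only.
sample_prs = [
    (datetime(2026, 1, 5, 9), datetime(2026, 1, 5, 17)),  # 8 hours
    (datetime(2026, 1, 6, 9), datetime(2026, 1, 7, 9)),   # 24 hours
    (datetime(2026, 1, 8, 9), datetime(2026, 1, 8, 13)),  # 4 hours
]
baseline_cycle_time = median_cycle_time_hours(sample_prs)
```

The median is used here instead of the mean so one long-running PR doesn't distort the baseline.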

Deliverable: A one-page "AI Adoption Snapshot" for your team. Where you are, where the gaps are, and what's blocking progress.

Month 2: Pilot and Learn

Week 1-2: Launch a pilot

- Select 1-2 high-trust engineers for advanced AI delegation experiments
- Choose a well-defined project with clear success criteria
- Set up enhanced monitoring (extra code review, test coverage requirements)

Week 3-4: Build the playbook

- Document what works and what doesn't from the pilot
- Create prompt templates based on successful patterns
- Draft initial delegation guidelines (what AI handles vs. humans)

Deliverable: A team playbook with prompt templates, delegation guidelines, and quality gates based on real results — not theory.

Month 3: Scale and Systematize

Week 1-2: Expand to the full team

- Roll out piloted workflows to all engineers
- Run a team workshop on prompt engineering and agent orchestration
- Deploy AI-aware CI/CD enhancements

Week 3-4: Embed in processes

- Update your 1:1 templates to include AI adoption check-ins
- Add AI delegation skills to growth plans and performance criteria
- Create a feedback loop for continuous improvement

Deliverable: Updated team processes that treat AI orchestration as a core competency, not an afterthought.

Tools Your Team Should Be Evaluating

The agentic engineering ecosystem is evolving fast. Here's what's worth your attention right now:

| Category | Tools | Why It Matters |
|---|---|---|
| AI Coding Agents | Claude Code, GitHub Copilot, Cursor | The primary interface between your engineers and AI |
| Agent Frameworks | LangChain, CrewAI, AutoGen | For building custom multi-agent workflows |
| Quality & Testing | AI-assisted code review, expanded static analysis | Safety nets for AI-generated code |
| Monitoring | Track AI vs. human commits, delegation rates | You can't manage what you can't measure |

The key insight from the Anthropic report: organizations like Zapier have achieved 97% AI adoption across their entire company — not just engineering. The tools are ready. The question is whether your team's processes are.

The Five Mistakes I See Engineering Managers Making

1. Assuming all engineers will adopt at the same pace. They won't. Your senior architect who takes pride in hand-crafted code will need a different approach than your mid-level engineer who's already using Claude Code for everything. Meet people where they are.

2. Not updating performance metrics. If you're still measuring engineers purely on lines of code or story points completed, you're incentivizing the wrong things. Create metrics that reward effective orchestration, not just output volume.

3. Treating AI as a tool instead of a team member. This sounds weird, but it matters. AI agents that run multi-step workflows need task descriptions, context, and quality expectations — just like a junior engineer would. Engineers who treat AI as a "fancy autocomplete" will never unlock its full potential.

4. Over-delegating without oversight architecture. The excitement of 3x productivity can lead teams to skip quality gates. Resist this. The first major AI-generated production incident on your team will set adoption back months. Build the safety nets first.

5. Ignoring the human side. Engineers have feelings about this transition. Some feel threatened. Some feel excited. Some feel both. Don't pretend it's purely a technical challenge. Have the conversations. Acknowledge the complexity.

The Bottom Line

Andrej Karpathy described agentic engineering as "orchestrating agents who [write code] and acting as oversight." Replace "agents" with "engineers" and you've described engineering management.

The shift from implementation to orchestration isn't a threat to your role. It's a validation of it.

But only if you're proactive. The engineering managers who wait to see how this plays out will find themselves managing teams that are falling behind. The ones who build the frameworks, set the standards, and lead the transition will be the most valuable leaders in their organizations.

The 60% usage / 0-20% delegation gap is closing. The question is whether your team closes it intentionally — with quality, oversight, and a plan — or chaotically.

You already know how to orchestrate. Now you just have more instruments to work with.

Start with one engineer. One use case. One month. Then scale what works.
